In recent years, marketers have flocked to AI—but their audiences aren’t quite sold.

Martin Sorrell, founder of the global WPP advertising empire, summarized AI’s timeline perfectly. He referred to 2023 (the arrival of ChatGPT) as the year of “wow!”, 2024 as the year of “how?”, and 2025 as the year of “now!”

He’s spot-on. For the past three years, email marketers have surfed the artificial intelligence wave with increasing confidence. AI is powering more seductive subject lines, compelling copy, perfect product selections, and superior split testing—which are all converging to deliver more engaged customers who can’t wait to open their wallets.

The big catch is that many of those customers don’t want their marketing coming from machines—they’d rather deal with real people.

Subscriber concerns about AI

In a recent survey, we asked over 1,000 consumers whether they would be more or less likely to trust marketing emails they knew were written by AI.

Two in five said they are “somewhat less” or “much less” likely to trust these messages.

However, there are some curious demographic variances.

Male respondents are almost 50 percent more likely to trust AI-generated messages than female respondents. Households with children living at home are far more trusting of AI content than those without (41 percent vs 11 percent). Perhaps the kids are teaching the parents!

Individuals with degree-level education are also more trusting of machine-sourced messages.

Of course, the ability to identify AI-authored content is another matter altogether. Only 39 percent of our respondents were either “slightly confident” or “very confident” they could spot these messages.

Why the negative feelings towards AI? I think it’s a mix of practical and emotional concerns. Let me summarize a few:

What are brands really doing with my personal data?

AI can sometimes process subscribers’ personal data in ways they didn’t anticipate when they first gave consent. For instance, they may have expected their purchase history to be analyzed, but not that their responsiveness to different tones of language would also be assessed. And with the arrival of new-generation privacy laws like GDPR and CCPA, consumers are far more aware of their data privacy rights.

They expect that brands will collect personal data for specified purposes and not further process it in a manner incompatible with those purposes. Data processing must be fair, lawful, and transparent. If consumers believe AI-driven processing crosses those lines, they’ll push back on its use. And even if brands are technically compliant with relevant regulations, the perception of misuse can damage brand trust and hurt engagement.

That’s why brands must lead with transparency and proactively show that their use of AI respects both the letter and spirit of privacy expectations.

How do I know I can trust AI-authored messages?

Almost three years ago, I wrote an article predicting a downside to the AI revolution—that it would help fraudsters become more effective. This is now reality, and scam emails are near-indistinguishable from legitimate ones. Email subscribers are understandably nervous. If mailbox providers struggle to identify malicious messages, how can individual consumers ever hope to do so?

To help address these concerns, email marketers should take two important steps:

- Update DMARC policies from p=none to either p=quarantine or p=reject. This tells mailbox providers to send emails that fail DMARC authentication to spam/junk (quarantine) or to refuse them outright (reject), limiting subscribers’ exposure to these harmful messages.

- Implement BIMI and Apple Branded Mail so senders’ authorized logos display next to their emails in subscribers’ inboxes when DMARC passes. This will boost recognition and trust, especially if subscribers are educated about why these features are important.
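To make the first step concrete, here is a minimal Python sketch that checks whether a DMARC record is at an enforcing policy. A DMARC policy is published as a DNS TXT record at `_dmarc.<your domain>`. The sketch works on the record text only and does no DNS lookup. The function names, the `example.com` domain, and the reporting address are illustrative assumptions, and the check ignores finer points such as the `pct` and `sp` tags.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split a DMARC TXT record into a tag -> value mapping."""
    tags: dict[str, str] = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


def is_enforcing(record: str) -> bool:
    """Return True if the record requests quarantine or reject."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        # Not a DMARC record at all.
        return False
    return tags.get("p", "none").lower() in {"quarantine", "reject"}


# Hypothetical records for example.com: monitoring only vs. enforcing.
monitoring = "v=DMARC1; p=none; rua=mailto:dmarc-reports@example.com"
enforcing = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"

print(is_enforcing(monitoring))  # False
print(is_enforcing(enforcing))   # True
```

Many senders move from p=none to p=quarantine before p=reject, reviewing the aggregate reports sent to the `rua` address at each stage so that legitimate mail streams are not caught by the stricter policy.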

I prefer dealing with human beings!

We can’t overstate the importance of applying the human touch to marketing messages.

Consumers are getting better at spotting AI-generated content—aided by inconsistencies in tone, style, vocabulary, and occasional “weirdness” in images. While AI provides powerful tools for creating subject lines and email copy, marketers should have guardrails in place to maintain brand consistency and tone of voice.

Who really benefits?

A key question remains: who truly benefits from brands using AI? Consumers know that brands gain value from their personal data—often amplified by AI. Most are comfortable with this, as long as they feel they’re receiving comparable value in return. That value might be financial (discounts, special offers) or non-financial (useful content, supporting meaningful causes).

When the value exchange feels balanced, the brand-customer relationship stays healthy. However, if brands are extracting disproportionate value by using AI (or customers feel like they are), customers may expect increased benefits too!

These concerns mean brands must become far more intentional about informing their customers how AI is used in their marketing communications.

Here’s my checklist for making this happen:

- Assess risk levels

The European Union’s AI Act requires businesses to categorize their AI usage by level of risk, ranging from “minimal,” through “limited,” all the way up to “high” and “unacceptable.”

In the latter case, we mean AI tools that negatively impact people’s lives or cause actual harm (for example, a betting company that uses AI to drive uncontrolled gambling). For non-EU businesses, similar laws are on the way in Canada, China, Japan, the UK, and the US, so now is a good time to get ahead of the curve.

- Update privacy policies

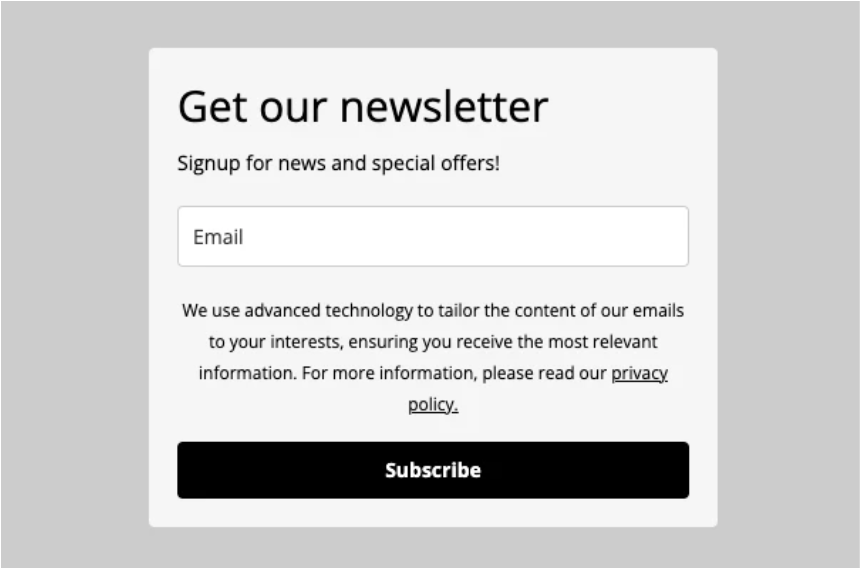

Sharing privacy policy information should start at the point of subscriber signup. We’d also encourage email programs to advise new subscribers if AI will process their personal data, as MailerLite does in the example below.

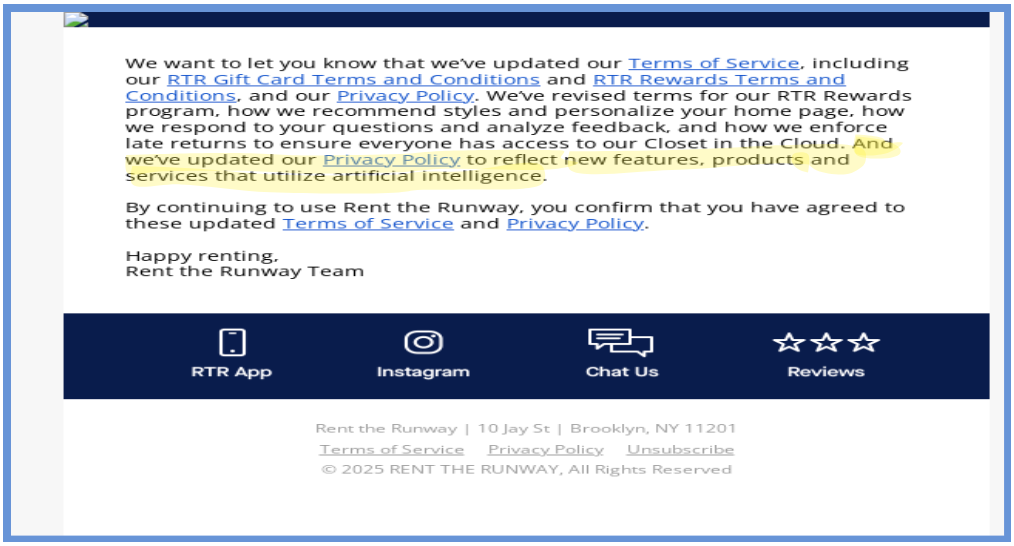

Provide information in privacy policies about how AI will be applied and what tools will be used. Ideally, consider introducing a separate AI policy in the same way that some businesses have standalone policies for cookies, accessibility, corporate responsibility, etc. Brands like Rent the Runway now explicitly refer to AI in their privacy policy, as referenced below.

- Update user preferences

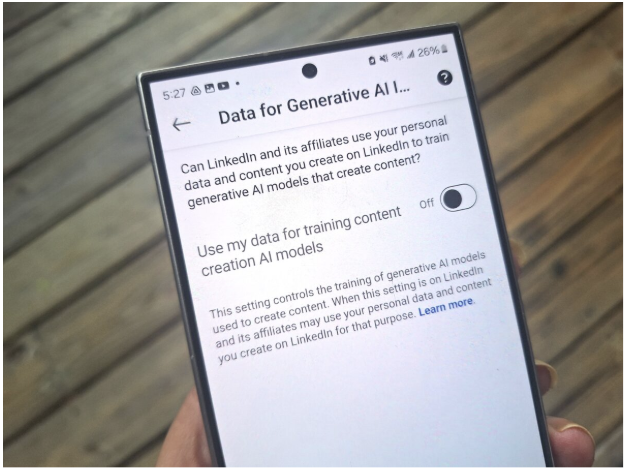

Using AI may change the preferences subscribers believe they originally selected. These could include increased mailing frequency, changes in content, and new interest categories. Marketers should update their preference centers to let subscribers control their exposure to these changes, and even to opt out altogether from receiving AI-generated messaging, as LinkedIn members can in the image below.

- Practice data minimization

In short, don’t collect any data you won’t use. Collect and process only data that is adequate, relevant, and limited to what is needed to achieve a specific purpose. While this is often a legal principle, it’s also an ethical one. If you are using AI tools, make certain they only collect data users have opted to provide. Ensure your customers’ data is used responsibly and in line with the consent they originally provided.
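One way to put this into practice is to strip a subscriber profile down to an approved set of fields before it reaches any AI tool. The Python sketch below is only an illustration: the purposes, field names, and the `minimize` function are hypothetical, and a real mapping would come from your own consent records.

```python
# Hypothetical mapping of processing purposes to the fields each one needs.
FIELDS_BY_PURPOSE = {
    "order_updates": {"email", "purchase_history"},
    "personalized_offers": {"email", "first_name", "purchase_history"},
}


def minimize(profile: dict, purpose: str) -> dict:
    """Keep only the fields approved for the given purpose."""
    allowed = FIELDS_BY_PURPOSE.get(purpose, set())
    return {field: value for field, value in profile.items() if field in allowed}


profile = {
    "email": "subscriber@example.com",
    "first_name": "Sam",
    "date_of_birth": "1990-01-01",
    "purchase_history": ["sku-123"],
}

# Only email and purchase_history are passed on for order updates.
print(minimize(profile, "order_updates"))
```

An unknown purpose returns an empty profile, so nothing is shared by default.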

- Revisit the legal basis for data processing

In some countries, data processing laws also require businesses to select a legal basis for processing personal data. “Legitimate interest” and “Consent” are the most common bases. However, where personal data is deemed to be sensitive, explicit consent is typically required. There is a plausible scenario whereby AI processes non-sensitive personal data to a level where it becomes sensitive. Should this be the case, businesses will need to revisit the legal basis they rely on and possibly strengthen the consent they obtain.

Bringing transparency and emotional intelligence into Q4 and beyond

As we approach the 2025 holiday season, what are the key learnings? In short, I’d say display a solid dose of emotional intelligence. While you’re excited about AI saving you time and delivering more effective marketing, your customers may be nervous about how you are using their personal data and how this may impact them.

Be transparent, empathetic, and make sure both parties benefit. Email’s success is founded on trust, and these are the building blocks!

Looking for more info on AI’s impact on the email marketing space? We had a fantastic conversation with a panel of email experts breaking down AI and other pain points from the Litmus State of Email 2025 report on our webinar series, State of Email Live.